Bob Mackin

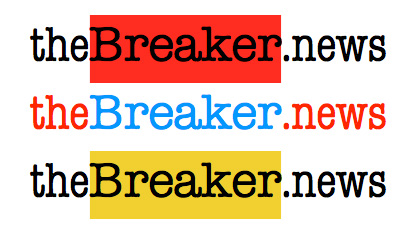

YouTube’s parent company says it is taking action to stop deepfake ads that portray Canadian finance minister Chrystia Freeland flogging a get-rich-quick scheme.

Liberal Finance Minister in deepfake videos seen on YouTube (YouTube)

theBreaker.news reported June 11 about the ads, which were found inadvertently on YouTube on May 31. The clips show Freeland, who is also Deputy Prime Minister under Justin Trudeau, in ads packaged to look like reports from TV news channels. But they were generated by artificial intelligence (AI). A spokesperson for Freeland called the videos and associated websites fake and rife with false and misleading information.

A Google spokesperson, citing company policy, provided comment on condition of anonymity.

“Protecting our users is our top priority and we have strict policies that govern the ads and content on our platform,” said the Google statement. “These scams are prohibited and we are terminating the ads accounts and channels behind them. We are investing heavily in our detection and enforcement against scam ads that impersonate public figures and the bad actors behind them.”

The source video for one of the Freeland clips was an April 7 Toronto news conference where she, ironically, announced $2.4 billion in taxpayer funding to boost Canada’s AI sector. Last November, Google agreed to pay $100 million a year, plus inflation, to Canadian media outlets in order to be exempt from the Liberal government’s Online News Act. The controversial law is also known as a tax on web links.

Google says it has long prohibited the use of deepfakes and other forms of doctored content that aim to deceive, defraud or mislead users about political issues. It requires user verification and employs human reviewers and machine learning to monitor and enforce policies. Of the 5.5 billion ads it removed last year, 206.5 million contravened the company’s misrepresentation policy. It also suspended more than 12.7 million advertiser accounts.

Mac Boucher, an AI content generation expert and partner in L.A.-based KNGMKR, spoke June 12 at the Trace Foundation and Vancouver Anti-Corruption Institute’s Journalism Under Siege conference in Vancouver.

Boucher showed a reel of deepfake videos made with the images and audio clips of celebrities such as Christopher Walken and Morgan Freeman. He cited the popular PlayHT program as an example.

“You feed it a video file or an audio file that I just rip off the internet. It takes a second to process and sometimes it messes up,” Boucher said. “But, then essentially, you have a TTS model, which is text-to-speech, where you can type anything you want, you can add sentiment to it, happy, sad, fearful, surprised, etc. It will start to be able to generate, oftentimes pretty bad generations, but it takes a little bit of tuning and tweaking and editing to make it come out a lot more naturally.”

Boucher, the brother of musician Grimes, said AI content is cheap to produce. Disinformation is the drawback, but it is most effective when “people no longer believe in a system that is not really working in their best interests, or at the appearance that is in their best interests.”

“The internet is probably just going to look a lot like Times Square as of right now, which is not really a place that people spend a lot of time. It just attracts tons of tourists and people just passing through,” Boucher said. “Then there’s going to be small neighbourhoods that have standards of excellence for whatever the data.”

Mac Boucher (LinkedIn)

Some governments are slowly pondering regulation of the fast-evolving technology. California state senator Bill Dodd tabled the AI Accountability Act, which would regulate AI use by state agencies, require transparency about that use and push for state-funded AI education.

The Canadian Anti-Fraud Centre (CAFC) warned in a March bulletin that deepfakes use “machine-learning algorithms to create realistic-looking fake videos or audio recordings. This is most commonly seen in investment and merchandise frauds where fake celebrity endorsements and fake news are used to promote the fraudulent offers.”

In May, the U.S. Federal Communications Commission proposed a $6 million fine for political consultant Steve Kramer, who was behind robocalls made two days before the first-in-the-nation presidential primary in New Hampshire. Those robocalls featured deepfake audio of President Joe Biden’s voice encouraging citizens to abstain from the primary and save their vote for the November presidential election.

Kramer was also arrested in New Hampshire on bribery, intimidation and voter suppression charges.

Toronto-based Marcus Kolga of DisinfoWatch.org is concerned that the technology is advancing so rapidly that deepfake videos could become undetectable and ultimately be used by bad actors for financial manipulation and geopolitical disruption on a mass scale.

“This technology is only improving and it’s improving not yearly, it’s improving every month,” Kolga said.
